New feature within the Dataiku LLM Mesh creates standards for tracking and optimizing Generative AI use cases across the enterprise

In the rapidly advancing landscape of Generative AI, the rush to harness large language models (LLMs) has exposed a barrier to widespread enterprise adoption: understanding and managing the associated costs. In response, Dataiku today announced the launch of LLM Cost Guard, a dedicated cost monitoring solution and a new component of the Dataiku LLM Mesh.


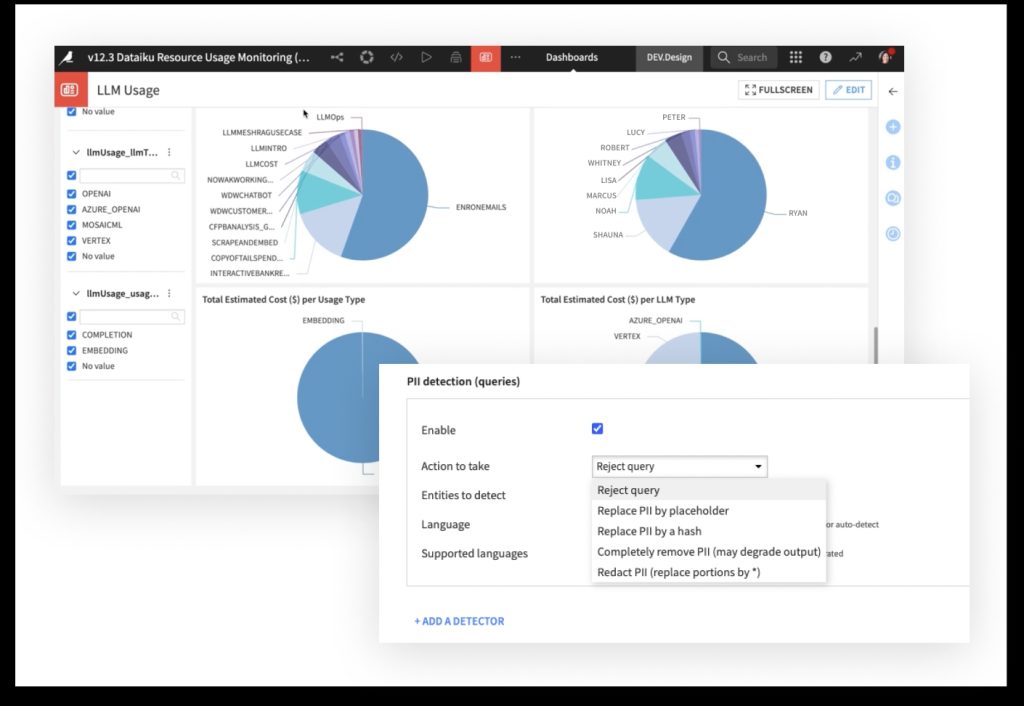

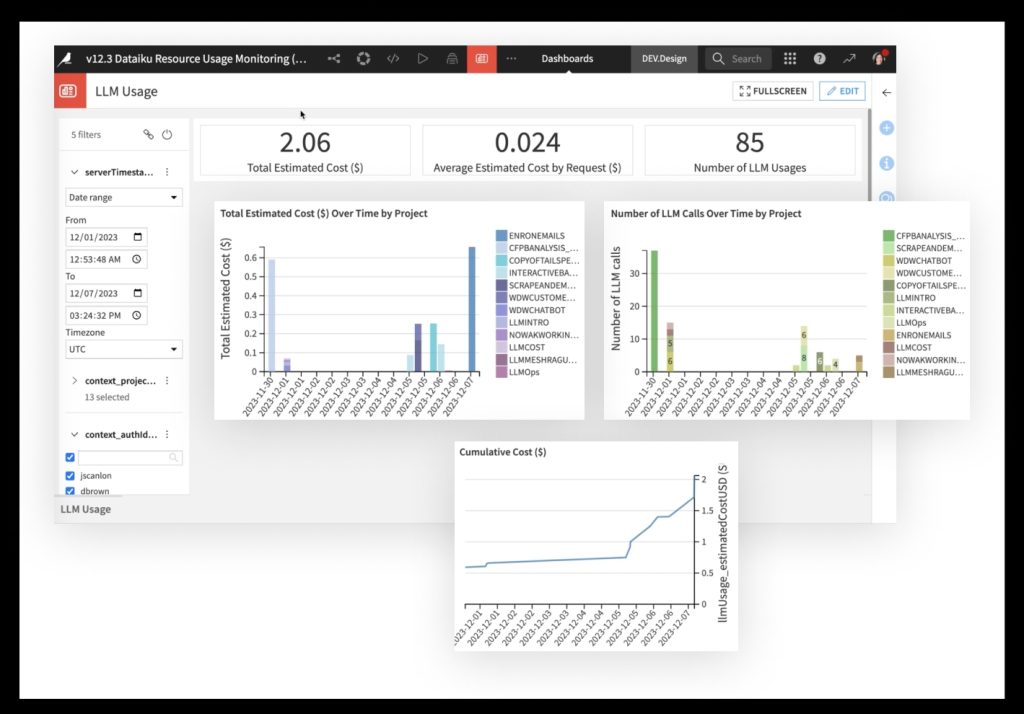

LLM Cost Guard traces and monitors enterprise LLM usage so that organizations can better anticipate and control Generative AI costs. It attributes costs to specific applications, providers, and users, giving a fine-grained view of which LLM use cases drive which costs. LLM Cost Guard is a feature of the Dataiku LLM Mesh, which provides a secure LLM gateway and lets customers remain provider-agnostic, with integrations for LLMs from OpenAI, Microsoft Azure, Amazon Web Services, Google Cloud Platform, Databricks, Anthropic, AI21 Labs, and Cohere.

“AI is a critical strategy for every enterprise’s innovation and growth, yet we constantly hear business leaders’ concerns not only about the potential costs of Generative AI projects but also about the inherent variability of that cost,” said Florian Douetteau, co-founder and CEO, Dataiku. “With LLM Cost Guard, we’re aiming to demystify these expenses. IT leaders will now not only have controlled, governed LLM access within the Dataiku LLM Mesh, but detailed, real-time control over spend, so that they can focus their time on building and innovating. By distinguishing between various costs and setting early warnings, we empower leaders to move forward with their Generative AI initiatives confidently.”

Unpacking the True Cost of Generative AI

The promise of Generative AI to redefine industries has accelerated its adoption, and over half of organizations plan to increase their investments within the next year. Yet cost management has been overlooked as IT leaders rush to deploy LLMs, hire skilled talent, and engineer the best prompts for quality, governance, and security. With Generative AI forecast to capture 55% of AI spend by 2030, ensuring that Generative AI initiatives rest on a strong financial justification has become critical.

“Dataiku’s innovation in Generative AI cost monitoring is pivotal, meeting a crucial market demand. As enterprises weave Generative AI more deeply into their operational fabric, the necessity for a solution that not only clarifies costs but also integrates governance into its framework becomes essential. Dataiku is empowering companies to pursue sustainable growth with a comprehensive understanding and oversight of their Generative AI investments,” said Ritu Jyoti, Group VP, AI and Automation Research, IDC.

The Dataiku LLM Mesh offers a centralized solution with an unparalleled breadth of connectivity to today’s most in-demand LLM providers. Because today’s enterprises often use several LLM vendors for different use cases, LLM Cost Guard monitors usage and reports insights across providers, giving organizations global visibility over their projects.

LLM Cost Guard enables enterprises to:

- Tag and Track LLM Expenditures: Assign costs to specific projects for better financial clarity and accountability.

- Differentiate Between Costs: Clearly distinguish between production and development expenses to aid strategic financial planning.

- Implement Early Warning Systems: Identify potential cost overruns early to mitigate financial risks, including those related to governance failures.

- Gain Detailed Insights: Access a dashboard of LLM usage and spending to make informed decisions and optimize investment.